AlphaFold 2: DeepMind's structural biology breakthrough

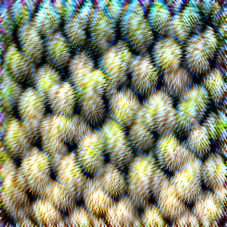

AlphaFold’s predictions vs. the experimentally-determined shapes of two CASP14 proteins.

DeepMind’s AlphaFold 2 is a major protein folding breakthrough. Protein folding is a problem in structural biology where, given the one-dimensional amino acid sequence of a protein, a computational model has to predict what three-dimensional structure the protein “folds” itself into. This structure is much more difficult to determine experimentally than the amino acid sequence, but it’s essential for understanding how the protein interacts with other machinery inside cells. In turn, this can give insights into the inner workings of diseases (“including cancer, dementia and even infectious diseases such as COVID-19”) and how to fight them.

Biennially since 1994, the Critical Assessment of Techniques for Protein Structure Prediction (CASP) has determined the state of the art in computational models for protein folding using a blind test. Research groups are presented (only) with the amino acid sequences of about 100 proteins whose shapes have recently been experimentally determined. They blindly predict these shapes using their computational models and submit them to CASP to be evaluated with a Global Distance Test (GDT) score, which roughly measures how much of the protein ends up close to where it’s supposed to be. GDT scores range from 0 to 100, with higher being better, and a model that scores at least 90 across different proteins would be considered good enough to be useful to science (“competitive with results obtained from experimental methods”).
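To make the GDT idea a bit more concrete, here is a minimal sketch of a GDT-style score in Python. It assumes the commonly cited GDT_TS definition (the average, over distance cutoffs of 1, 2, 4 and 8 ångström, of the percentage of residues within that cutoff), and it assumes the predicted and experimental structures have already been superimposed. The real CASP evaluation also searches over many superpositions, which this sketch skips, and the function name `gdt_ts` and the toy coordinates are made up for illustration.

```python
import math


def gdt_ts(predicted, experimental, cutoffs=(1.0, 2.0, 4.0, 8.0)):
    """Simplified GDT_TS-style score for two pre-aligned structures.

    Both arguments are equal-length lists of (x, y, z) coordinates in
    angstrom, one point per residue. Assumption: the structures are already
    superimposed; the official score also optimizes the superposition.
    """
    if len(predicted) != len(experimental) or not predicted:
        raise ValueError("need two non-empty structures of equal length")

    # Distance between each predicted residue and its experimental position.
    distances = [math.dist(p, e) for p, e in zip(predicted, experimental)]

    # Fraction of residues that fall within each distance cutoff.
    fractions = [
        sum(d <= cutoff for d in distances) / len(distances)
        for cutoff in cutoffs
    ]

    # Average over the cutoffs, scaled to the 0-100 range.
    return 100.0 * sum(fractions) / len(fractions)


# Toy example: four residues, predicted 0.5, 1.5, 3.0 and 10.0 angstrom off.
experimental = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0)]
predicted = [(0.0, 0.5, 0.0), (3.8, 1.5, 0.0), (7.6, 3.0, 0.0), (11.4, 10.0, 0.0)]
score = gdt_ts(predicted, experimental)
```

In this toy case one residue is within 1 Å, two within 2 Å, and three within both 4 Å and 8 Å, so the score works out to 56.25. A perfect prediction scores 100.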

Before CASP13 in 2018, no model had ever scored significantly above 40 GDT. That year, the first version of AlphaFold came in at nearly 60 GDT, which was already “stunning” at the time (see DT #21). At CASP14 this year, AlphaFold 2 blew its previous results out of the water and achieved a median score of 92.4 GDT across all targets. This was high enough for CASP to declare the problem “solved” in their press release and to start talking about new challenges for determining the shape of multi-protein complexes.

I’ve waited a bit to write about AlphaFold 2 until the hype died down because, oh boy, was there a lot of hype. DeepMind released a slick video about the team’s process, their results were covered with glowing features in Nature and The New York Times, and high praise came even from the leaders of DeepMind’s biggest competitors, including OpenAI’s Ilya Sutskever and Stanford HAI’s Fei-Fei Li. It was a pretty exciting few days on ML twitter.

Columbia University’s Mohammed AlQuraishi, who has been working on protein folding for over a decade, was one of the first people to break the CASP14 news. His blog post about CASP13 and AlphaFold 1 was also widely circulated back in 2018, so a lot of people in the field were interested in what he’d have to say this year. Last week, after the hype died down a bit, AlQuraishi published his perspective on AlphaFold 2. He summarized it by saying “it feels like one’s child has left home”: AF2 got results he did not expect to see until the end of this decade, even when taking AF1 into account. That is bittersweet for someone whose lab has also been working on this same problem for a long time.

AlQuraishi is overall extremely positive about DeepMind’s results here, but he does express disappointment at their “falling short of the standards of academic communication” — the lab has so far been much more secretive about AF2 than it was about AF1 (which is open-source). AlQuraishi’s post is very long and technical, but if you want to know exactly how impressive AlphaFold 2 is, learn the basics of how it works, read about its potential applications in broader biology, or see some of the hot takes against it debunked, the post is definitely worth the ~75 minutes of your time. (I always find it energizing to see someone excitedly explain a big advancement in their field that they did not directly work on; here’s the link again.)

I personally also can’t wait to see the first practical applications of AlphaFold, which I expect we’ll start to see DeepMind talk about in the coming years. (Hopefully!) For one, they’ve already released AlphaFold’s predictions for some proteins associated with COVID-19.

Google AI's ethics crisis

Google AI is in the middle of an ethics crisis. Timnit Gebru, the AI ethics researcher behind Gender Shades (see DT #42), Datasheets for Datasets (#41), and much more, got pushed out of the company after a series of conflicts. Karen Hao for MIT Technology Review:

A series of tweets, leaked emails, and media articles showed that Gebru’s exit was the culmination of a conflict over [a critical] paper she co-authored. Jeff Dean, the head of Google AI, told colleagues in an internal email (which he has since put online) that the paper “didn’t meet our bar for publication” and that Gebru had said she would resign unless Google met a number of conditions, which it was unwilling to meet. Gebru tweeted that she had asked to negotiate “a last date” for her employment after she got back from vacation. She was cut off from her corporate email account before her return.

See Casey Newton’s coverage on his Platformer newsletter for both Gebru’s and Jeff Dean’s emails (and here for his extended statement). This story unfolded over the past week and is probably far from over, but from everything I’ve read so far (which is a lot, hence this email hitting your inbox a bit later than usual) I think Google management made the wrong call here. Their statement on the matter focuses on missing references in Gebru’s paper, but as Google Brain Montreal researcher Nicolas Le Roux points out:

… [The] easiest way to discriminate is to make stringent rules, then to decide when and for whom to enforce them. My submissions were always checked for disclosure of sensitive material, never for the quality of the literature review.

This is echoed by a top comment on HackerNews. From Gebru’s email, it sounds like frustrations had been building up for some time, and that the lack of transparency surrounding the internal rejection of this paper was simply the final straw. I think it would’ve been more productive for management to start a dialog with Gebru here — forcing a retraction, “accepting her resignation” immediately and then cutting off her email only served to escalate the situation.

Gebru’s research on the biases of large (compute-intensive) vision and language models is much harder to do without the resources of a large company like Google. This is a problem that academic ethics researchers often run into; OpenAI’s Jack Clark, who gave feedback on Gebru’s paper, has also pointed this out. I always found it admirable that Google AI, as a research organization, intellectually had the space for voices like Gebru’s to critically investigate these things. It’s a shame that it was not able to sustain an environment in which this could be fostered.

In the end, besides the ethical issues, I think Google’s handling of this situation was also a big strategic misstep. 1500 Googlers and 2100 others have signed an open letter supporting Gebru. Researchers from UC Berkeley and the University of Washington said this will have “a chilling effect” on the field. Apple and Twitter are publicly poaching Google’s AI ethics researchers. Even mainstream outlets like The Washington Post and The New York Times have picked up the story. In the week leading up to NeurIPS and the Black in AI workshop there, is this a better outcome for Google AI than letting an internal researcher submit a conference paper critical of large language models?

Photoshop's Neural Filters

Light direction is one of many new AI-powered features in Photoshop; in the middle picture, the light source is on the left; in the right picture, it’s moved to the right.

Adobe’s latest Photoshop release is jam-packed with AI-powered features. The pitch, by product manager Pam Clark:

You already rely on artificial intelligence features in Photoshop to speed your work every day like Select Subject, Object Selection Tool, Content-Aware Fill, Curvature Pen Tool, many of the font features, and more. Our goal is to systematically replace time-intensive steps with smart, automated technology wherever possible. With the addition of these five major new breakthroughs, you can free yourself from the mundane, non-creative tasks and focus on what matters most – your creativity.

Adobe is branding the most exciting of these new features as Neural Filters: neural-network-powered image manipulations that are parameterized by sliders in the Photoshop UI. Some of them automate tasks that were previously very labor-intensive, while others enable changes that were previously impossible. Here are a few of both:

- Style transfer: apply one photo’s style to another, like the classic “make this look like a Picasso / Van Gogh / Monet.”

- Smart portraits: subtly change a photo subject’s age, expression, gaze direction, pose, hair thickness, etc.

- Colorize: infer colors for black-and-white photos based on their contents.

- JPEG Artifacts Removal: smooth out the blocky artifacts that occur on patches of JPEG-compressed photos.

These all run on-device and came out of a collaboration between Adobe Research and NVIDIA, implying they’re best suited to machines with beefy GPUs, which is not surprising. However, the blog post is a little vague about the specifics here (“performance is particularly fast on desktops and notebooks with graphics acceleration”), so I wonder whether Neural Filters is also optimized for any other AI accelerator chips that Adobe can’t mention yet. In particular, Apple recently showed off their new A14 chips that feature a much faster Neural Engine. These chips launched in the latest iPhones and iPads but will also be in a new line of non-Intel “Apple Silicon” Macs, rumored to be announced next month. What are the chances that Apple will boast about the performance of Neural Filters on the Neural Engine during the presentation? I’d say pretty big. (Maybe worthy of a Ricky, even?)

Anyway, this Photoshop release is exactly the kind of productized AI that I started DT to cover: advanced machine learning models — that only a few years ago were just cool demos at conferences — wrapped up in intuitive UIs that fit into users’ existing workflows. It’s now just as easy to tweak the intensity of a smile or the direction of a gaze in a portrait photo as it is to manipulate its hue or brightness. That’s pretty amazing.

OpenAI and Microsoft: GPT-3 and beyond

OpenAI is exclusively licensing GPT-3 to Microsoft. What does this mean for their future relationship?

GPT-3 is OpenAI’s latest gargantuan language model (see DT #42) that’s uniquely capable of performing many different “text-in, text-out” tasks — demos range from imitating famous writers to generating code (#44) — without needing to be fine-tuned: its crazy scale makes it a few-shot learner.
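For readers who haven’t seen few-shot prompting in practice, here is a rough sketch of what it looks like: instead of fine-tuning the model, you put a handful of worked examples directly into the text prompt and let the model continue the pattern. The helper name `build_few_shot_prompt` and the `Input:` / `Output:` layout are my own illustrative assumptions, not an official OpenAI format, and the sketch only builds the prompt string without calling any API.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a few-shot text prompt from worked examples.

    Hypothetical helper: the "Input:" / "Output:" layout is an assumption
    chosen for illustration, not a format required by any particular model.
    """
    lines = [task_description, ""]

    # Each worked example shows the model the pattern it should continue.
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")

    # The final, unanswered item is what the model is asked to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
```

The resulting string would be sent to the model as-is. The “learning” happens entirely inside the prompt, with no change to the model’s weights.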

In July 2019, OpenAI announced it got a $1 billion investment from Microsoft. Back then, this raised some eyebrows in the (academic) machine learning community, which can sometimes be a bit allergic to the commercialization of AI (#19). The exact terms of the investment were never disclosed, but some key elements of the deal were. Tom Simonite for WIRED:

Most interesting bit of the OpenAI announcement: “we intend to license some of our pre-AGI technologies, with Microsoft becoming our preferred partner.”

Now, a year and a bit later, that’s exactly what happened. From the OpenAI blog:

In addition to offering GPT-3 and future models via the OpenAI API, and as part of a multiyear partnership announced last year, OpenAI has agreed to license GPT-3 to Microsoft for their own products and services.

What does that mean? Nick Statt for The Verge:

A Microsoft spokesperson tells The Verge that its exclusive license gives it unique access to the underlying code of GPT-3, which contains technical advancements it hopes to integrate into its products and services.

In their blog post, Microsoft pitches this as a way to “expand [their] Azure-powered AI platform in a way that democratizes AI technology,” to which the community again reacted negatively: if you want to democratize AI, why not just open-source GPT-3’s code and training data?* I agree that “democratizing” is a bit of a stretch, but I think there’s a much more interesting discussion to be had here than the one on a self-congratulatory word choice in a corporate press release. Perhaps ironically, that discussion also starts from overanalyzing another few words in that very same press release.

According to Microsoft’s blog post about the licensing deal, GPT-3 “is trained on Azure’s AI supercomputer.” I wonder if that means OpenAI is now using Microsoft’s open-source DeepSpeed library (#34) to train its GPT models. DeepSpeed is a library for distributed training of enormous ML models that has specific features to support training large Transformers; Microsoft Research claimed in May that it’s capable of training models with up to 170 billion parameters (#40). GPT-3 is a 175-billion-parameter Transformer that was released in June, just one month later. That seems unlikely to be a coincidence, and Microsoft’s latest DeepSpeed update (#49) even includes some experimental work using the GPT-3 architecture.

So this suggests that the partnership goes beyond just the exchange of Microsoft’s money and compute for OpenAI’s trained models and ML brand strength (an exchange of cloud for clout, if you will) that we previously expected. Are the companies actually also deeply collaborating on ML and systems engineering research? I’d love to find out.

If so, this could be an early indication that Microsoft — who I’m sure is at least a little bit envious of Google’s ownership of DeepMind — will eventually want to acquire OpenAI. And it could be a great fit. Looking at Microsoft’s recent acquisition history, it has so far let GitHub (which it acquired two years ago) continue to operate largely autonomously. This makes it an attractive potential parent company for OpenAI: the lab probably wouldn’t have to give up too much of its independence under Microsoft’s stewardship. So unless OpenAI actually invents and monetizes some form of artificial general intelligence (AGI) in the next five to ten years — which I don’t think they will — I wouldn’t be surprised if they end up becoming Microsoft’s DeepMind.

* One big reason for not open-sourcing GPT-3’s code and data is security; see my coverage of OpenAI’s staged release strategy for GPT-2 (#8, #13, #22, #27).

Autonomous trucks will be the first big self-driving market

Autonomous trucking is where I think self-driving vehicle technology will have its first big impact, well before e.g. the taxi or ride-sharing industries. Long-distance highway truck driving (with hubs at city borders where human drivers take over) is a much simpler problem to solve than inner-city taxi driving. Beyond the obvious lower complexity of not having to deal with traffic lights, small streets and pedestrians, a specific highway route between two high-value hubs can also be mapped in high detail much more economically than an ever-changing city center could. And, of course, self-driving trucks won’t have the 11-hour-per-day driving safety limit imposed on human drivers. This all makes for quite an attractive pitch when taken together.

In recent news, Jennifer Smith at the Wall Street Journal reported that startup Ike Robotics has reservations for its first 1,000 heavy-duty autonomous trucks, from “transport operators Ryder System Inc., NFI Industries Inc. and the U.S. supply-chain arm of German logistics giant Deutsche Post AG.”

Tapping into big carriers’ logistics networks and operational expertise means Ike can focus on the technology piece—systems engineering, safety and technical challenges such as computer vision—said Chief Executive Alden Woodrow.

“They are going to help us make sure we build the right product, and we are going to help them prepare to adopt it and be successful,” said Mr. Woodrow, who worked on self-driving trucks at Uber Technologies Inc. before co-founding Ike in 2018.

Unlike rival startups, Ike wants to be a software-as-a-service provider of self-driving tech for existing logistics operators, instead of becoming one themselves. It’ll be interesting to see how well this business model works out when competitors start offering a similar service — the biggest question is how easy or hard it’ll be for an operator to swap one self-driving SaaS out for another. If it’s easy, that’ll make for a very competitive space.

(On the disruption side: there are nearly 3 million truck drivers in the United States alone, so widespread automation here can be quite impactful. Until today, I thought trucking was the biggest profession in most US states because of this 2015 NPR article, but apparently that was based on wrongly interpreted statistics; the most common job is in retail — no surprise there. Nonetheless, trucking is currently a major profession. A decade from now it may no longer be.)