AR Face Doodles

Yours truly, now with mustache, beard, and brows.

Cyril Diagne, resident artist/designer/programmer at Google Arts & Culture, built AR Face Doodle —a website that lets you draw on your face in 3D. It’s powered by MediaPipe Facemesh, “a lightweight machine learning pipeline predicting 486 3D facial landmarks to infer the approximate surface geometry of a human face,” which can run real-time in browsers using TensorFlow.js. The site lets you draw squiggles on top of your selfie camera feed and then locks them to the closest point on your face. As you move your face around—or even scrunch it up—the doodles stick to their places and move around in 3D remarkably well. AR Face Doodle should work on any modern browser; you can also check out the site’s code on GitHub: cyrildiagne/ar-facedoodle.

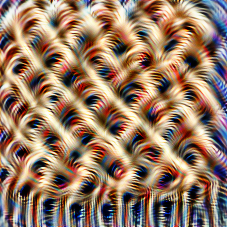

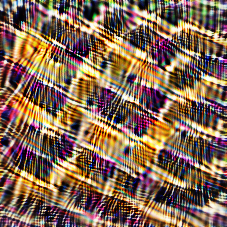

Cloudflare's ML-powered bot blocking

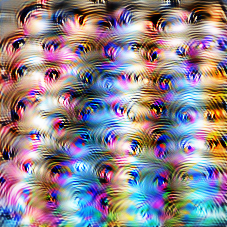

Cloudflare’s overview of good and bad bots.

Web infrastructure company Cloudflare is using machine learning to block “bad bots” from visiting their customers’ websites. Across the internet, malicious bots are used for content scraping, spam posting, credit card surfing, inventory hoarding, and much more. Bad bots account for an astounding 37% of internet traffic visible to Cloudflare (humans are responsible for 60%).

To block these bots, Cloudflare built a scoring system based on five detection mechanisms: machine learning, a heuristics engine, behavior analysis, verified bots lists, and JavaScript fingerprinting. Based on these mechanisms, the system assigns a score of 0 (probably a bot) to 100 (probably a human) to each request passing through Cloudflare—about 11 million requests per second, that is. These scores are exposed as fields for Firewall Rules, where site admins can use them in conjunction with other properties to decide whether the request should pass through to their web servers or be blocked.

Machine learning is responsible for 83% of detection mechanisms. Because support for categorical features and inference speed were key requirements, Cloudflare went with gradient-boosted decision trees as their model of choice (implemented using CatBoost). They run at about 50 microseconds per inference, which is fast enough to enable some cool extras. For example, multiple models can run in shadow mode (logging their results but not influencing blocking decisions), so that Cloudflare engineers can evaluate their performance on real-world data before deploying them into the Bot Management System.

Alex Bocharov wrote about the development of this system for the Cloudflare blog. It’s a great read on adding an AI-powered feature to a larger product offering, with good coverage of all the tradeoffs involved in that process.

NVIDIA's Inception climate AI startups

For the 50th anniversary of Earth Day, Isha Salin wrote about three startups using deep learning for environmental monitoring, which are all part of NVIDIA’s Inception program for startups. Here’s what they do.

Orbital Insight maps deforestation to aid the Global Forest Watch, similar to the work being done by and 20tree.ai (DT #25) and David Dao’s lab at ETH Zurich (DT #28):

The tool can also help companies assess the risk of deforestation in their supply chains. Commodities like palm oil have driven widespread deforestation in Southeast Asia, leading several producers to pledge to achieve zero net deforestation in their supply chains this year.

3vGeomatics monitors the thawing of permafrost on the Canadian Arctic in a project for the Canadian Space Agency. Why it matters:

As much as 70 percent of permafrost could melt by 2100, releasing massive amounts of carbon into the atmosphere. Climate change-induced permafrost thaw also causes landslides and erosion that threaten communities and critical infrastructure.

Azevea is monitoring construction around oil and gas pipelines to detect construction activities that may damage the pipes and cause leaks:

The U.S. oil and gas industry leaks an estimated 13 million metric tons of methane into the atmosphere each year — much of which is preventable. One of the leading sources is excavation damage caused by third parties, unaware that they’re digging over a natural gas pipeline.

I’m always a bit hesitant to cover ML startups that work with oil and gas companies, but I think in this case their work is a net benefit. For details about the GPU tech being used by all these projects, see Salin’s full post.

Bias reductions in Google Translate

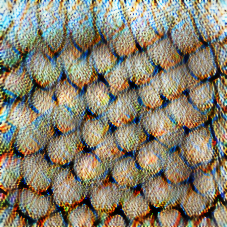

Gender-specific translations from Persian, Finnish, and Hungarian in the new Google Translate.

Google is continuing to reduce gender bias in its Translate service. Previously, it might translate “o bir doktor” in Turkish, a language that does not use gendered pronouns, to “he is a doctor”—assuming doctors are always men—and “o bir hemşire” to “she is a nurse”—assuming that nurses are always women. This is a very common example of ML bias, to the point that it’s covered in introductory machine translation courses like the one I took in Edinburgh last year. That doesn’t mean it’s easy to solve, though.

Back in December 2018, Google took a first step toward reducing these biases by providing gender-specific translations in Translate for Turkish-to-English phrase translations, like the example above, and for single word translations from English to French, Italian, Portuguese, and Spanish (DT #3). But as they worked to expand this into more languages, they ran into scalability issues: only 40% of eligible queries were actually showing gender-specific translations.

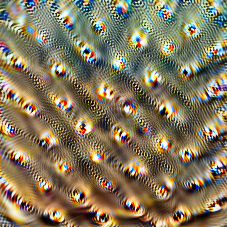

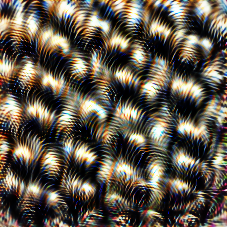

Google’s original and new approaches to gender-specific translations.

They’ve now overhauled the system: instead of attempting to detect whether a query is gender-neutral and then generating two gender-specific translations, it now generates a default translation and, if this translation is indeed gendered, also rewrites it to an opposite-gendered alternative. This rewriter uses a custom dataset to “reliably produce the requested masculine or feminine rewrites 99% of the time.” As before, the UI shows both alternatives to the user.

Another interesting aspect of this update is how they evaluate the overall system:

We also devised a new method of evaluation, named bias reduction, which measures the relative reduction of bias between the new translation system and the existing system. Here “bias” is defined as making a gender choice in the translation that is unspecified in the source. For example, if the current system is biased 90% of the time and the new system is biased 45% of the time, this results in a 50% relative bias reduction. Using this metric, the new approach results in a bias reduction of ≥90% for translations from Hungarian, Finnish and Persian-to-English. The bias reduction of the existing Turkish-to-English system improved from 60% to 95% with the new approach. Our system triggers gender-specific translations with an average precision of 97% (i.e., when we decide to show gender-specific translations we’re right 97% of the time).

The standard academic metrics (recall and average precision) did not answer the most important question about the two different approaches, so the developers came up with a new metric specifically to evaluate relative bias reduction. Beyond machine translation, this is a nice takeaway for productized AI in general: building the infrastructure and metrics to measure how your ML system behaves in its production environment is at least as important as designing the model itself.

In the December 2018 post announcing gender-specific translations, the authors mention that one next step is also addressing non-binary gender in translations; this update does not mention that, but I hope it’s still on the roadmap. Either way, it’s commendable that Google has continued pushing on this even after the story has been out of the media for a while now.

Report: Toward Trustworthy AI Development

That’s a lot of authors and institutions.

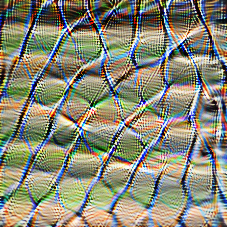

A large coalition of big-name ML researchers and institutions published Toward Trustworthy AI Development: Mechanisms for Supporting Verifiable Claims. The 80-page report recognizes “that existing regulations and norms in industry and academia are insufficient to ensure responsible AI development” and presents a set of recommendations for providing evidence of the “safety, security, fairness, and privacy protection of AI systems.” Specifically, they outline two types of mechanisms:

- Mechanisms for AI developers to substantiate claims about their AI systems—going beyond just saying a system is “privacy-preserving” in the abstract, for example.

- Mechanisms that users, policy makers, and regulators can use to increase the specificity and diversity of demands they make to AI developers—again, going beyond abstract, unenforceable requirements.

The two-page executive summary and single-page list of recommendations (categorized across institutions, software, and hardware) are certainly worth a read for anyone who is to some extent involved in AI development, from researchers to regulators:

Recommendations from the report by Brundage et al. (2020).

On the software side, I found the audit trail recommendation especially interesting. The authors state the problem as such:

AI systems lack traceable logs of steps taken in problem-definition, design, development, and operation, leading to a lack of accountability for subsequent claims about those systems’ properties and impacts.

Solving this will have go far beyond just saving a git commit history that traces the development of a model. For the data collection, testing, deployment, and operational aspects, there are no reporting or verification standards in widespread use yet.

I don’t think these standards can be sensibly defined for “AI” at large, so they’ll have to be implemented on an industry-by-industry basis. There are a whole different set of things to think about for self-driving cars than for social media auto-moderation, for example.

(Also see OpenAI’s short write-up of the report.)